A new study published by Maxim Bazhenov's lab at UC San Diego found that replicating sleep in spiking neural networks allows these networks to learn new tasks without forgetting old ones.

Artificial neural networks (ANNs) contain multiple layers of interconnected, computer-simulated neurons. According to IBM, the most basic ANNs have three layers: an input layer, a hidden layer, and an output layer. ANNs operate by passing signals between neurons; depending on the signals it receives, each neuron may or may not transmit a signal to the next node.
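As a rough illustration of that three-layer structure, the sketch below passes an input through one hidden layer and one output layer of simple threshold neurons. The weights, the threshold, and the function names are made up for this example and do not come from the study or from IBM.

```python
# Minimal sketch of a three-layer network of threshold neurons.
# All weights and thresholds are hypothetical values chosen for illustration.

def fires(total_input, threshold=1.0):
    """A neuron transmits (1) only if its summed input reaches the threshold."""
    return 1 if total_input >= threshold else 0

def layer(inputs, weights, threshold=1.0):
    """Each row of `weights` holds one neuron's connections to the previous layer."""
    return [
        fires(sum(w * x for w, x in zip(row, inputs)), threshold)
        for row in weights
    ]

W_HIDDEN = [[0.6, 0.6], [1.0, 0.0]]  # two hidden neurons, two inputs each
W_OUTPUT = [[1.0, 1.0]]              # one output neuron reading both hidden neurons

def forward(inputs):
    hidden = layer(inputs, W_HIDDEN)   # input layer -> hidden layer
    return layer(hidden, W_OUTPUT)     # hidden layer -> output layer

print(forward([1, 1]))  # both hidden neurons fire, so the output neuron fires
print(forward([0, 1]))  # neither hidden neuron reaches threshold, so nothing is passed on
```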

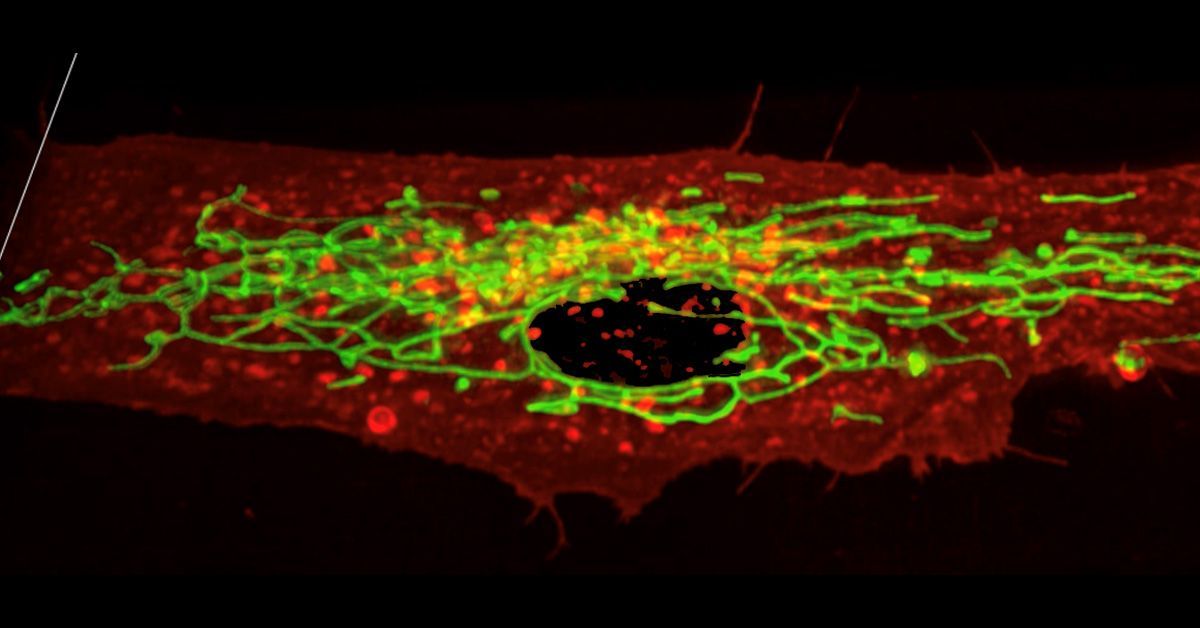

In this study, the authors used spiking neural networks (SNNs). While the SNNs in this study have the same layered structure as an ANN, they more closely resemble biological neurons because they communicate over time through discrete electrical events known as spikes. SNNs take the timing of signals into account, while ANNs do not.
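To show why timing matters for a spiking neuron, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook simplification. It is not the neuron model used in the study, and the `threshold` and `leak` values are assumptions picked for the example.

```python
# Minimal leaky integrate-and-fire sketch (a textbook simplification,
# not the neuron model from the study). Parameter values are hypothetical.

def simulate_lif(input_current, threshold=1.0, leak=0.5):
    """Return the time steps at which the neuron spikes."""
    voltage = 0.0
    spike_times = []
    for t, current in enumerate(input_current):
        # The stored voltage decays each step, then the new input is added.
        voltage = leak * voltage + current
        if voltage >= threshold:
            spike_times.append(t)
            voltage = 0.0  # reset after a spike
    return spike_times

# Three inputs arriving back to back add up and trigger a spike.
print(simulate_lif([0.6, 0.6, 0.6]))
# The same three inputs spread out in time leak away before they can add up.
print(simulate_lif([0.6, 0, 0, 0.6, 0, 0, 0.6]))
```

The total input is identical in both runs; only the spacing differs, which is the sense in which a spiking neuron is sensitive to timing.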

One of the major problems experimenters face when using SNNs is that the networks can retain the ability to solve only one problem or complete only one task at a time. For example, the SNN in this study was trained to recognize patterns. If an SNN is taught to solve two types of problems in sequence, it will retain the ability to solve only the second type. The first task is overwritten in favor of the second. This is called “catastrophic forgetting.”

This problem can be solved by teaching both tasks at once, using the training data sets for the two tasks concurrently. While this method expands what SNNs are capable of, it does not replicate the continuous learning of the human brain. Humans are able to learn many tasks in sequence without forgetting how to do previous activities.
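The difference between training in sequence and training on both data sets together can be seen even in a toy model. The sketch below uses a single linear unit with two shared weights and a basic error-correction rule; it is not a spiking network and does not use the study's learning rules, and the two "tasks" are single made-up input and target pairs.

```python
# Toy illustration only: one linear unit trained with the delta rule.
# This is not the spiking network or the learning method from the study.

TASK_A = ((1.0, 0.5), 1.0)    # (input, target), hypothetical values
TASK_B = ((0.5, 1.0), -1.0)

def predict(w, x):
    return w[0] * x[0] + w[1] * x[1]

def update(w, task, lr=0.5):
    """Nudge the shared weights to reduce the error on one task."""
    x, target = task
    err = predict(w, x) - target
    return [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]

def error(w, task):
    x, target = task
    return abs(predict(w, x) - target)

def train_sequential(steps=200):
    """Learn task A completely, then learn task B with no further exposure to A."""
    w = [0.0, 0.0]
    for _ in range(steps):
        w = update(w, TASK_A)
    for _ in range(steps):
        w = update(w, TASK_B)
    return w

def train_interleaved(steps=200):
    """Alternate between the two tasks, using both data sets throughout."""
    w = [0.0, 0.0]
    for _ in range(steps):
        w = update(w, TASK_A)
        w = update(w, TASK_B)
    return w

w_seq = train_sequential()
w_mix = train_interleaved()
print("sequential  error on A, B:", error(w_seq, TASK_A), error(w_seq, TASK_B))
print("interleaved error on A, B:", error(w_mix, TASK_A), error(w_mix, TASK_B))
```

In this toy setup, sequential training ends with a large error on task A because the weights that solved it were overwritten while learning task B, whereas interleaved training settles on weights that satisfy both. The catch is that interleaving requires keeping the old task's training data on hand.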

Fourth-year Ph.D. student and co-author Erik Delanois provided this analogy: “If you spend some time learning how to play golf and you take a break and learn how to play tennis, you’re not going to forget how to play golf just because you learned how to play tennis.”

The researchers found that after a first task is learned, a second task can be learned without overwriting the first if the learning period is interspersed with periods of “sleep.” More specifically, the researchers developed and applied a model of REM, or rapid eye movement, sleep. This type of sleep has been implicated in the consolidation of procedural memories.

After the network learned one task, sleep was interwoven with the learning of a second task. Theoretically, this allows the SNN to replay the old task while concurrently learning the new one. At the end of the training period, the SNN was able to complete both tasks without any catastrophic forgetting. The results also provide new insight into how REM sleep contributes to memory consolidation.

“It helps to visualize and understand what synapses could potentially be doing in biology. It’s a good example and illustration of what’s going on under the hood,” Delanois said.

The authors of the study include lead authors Delanois and Ryan Golden, Pavel Sanda of the Institute of Computer Science of the Czech Academy of Sciences, and UCSD Professor Maxim Bazhenov.

Art by Ava Bayley for the UCSD Guardian.

Sloboda Studio • Jan 10, 2023 at 7:38 am

Thanks for your work! In several fields, artificial neural networks are capable of superhuman performance. Despite these developments, these networks do not succeed at sequential learning; instead, they excel at more recent tasks at the expense of those that were previously learned. Animals and humans, on the other hand, both have a great capacity for lifelong learning and for expanding their knowledge. By facilitating the spontaneous reactivation of previously established memory patterns, sleep has been proposed to play a significant role in memory and learning. Here, we show that interspersing new task training with sleep-like activity optimizes the network’s memory representation in synaptic weight space to prevent forgetting old memories. The model we use for this is a spiking neural network, which simulates sensory processing and reinforcement learning in animal brains. This is made possible by sleep replaying previous memory traces without explicitly using the previous task data.

Barbara Blankenchip • Nov 30, 2022 at 7:27 pm

I’m wondering if you are trying to have this program solve multiple problems at a time? The human brain seems to solve one task / problem at a time, are you trying to get this program to solve multiple problems/tasks consecutively? And if so, would sleep help the human brain do the same? So exciting